How AI Research Summarizers Save Time and Enhance Academic Productivity

AI research summarizers condense complex scholarly documents into focused, actionable outputs, using natural language processing (NLP) and large language models (LLMs) to extract and synthesize key findings. This article explains what AI summarization is, how it works for research papers, and why it matters for academic productivity and knowledge management. Readers will learn the technical differences between extractive and abstractive approaches, concrete time-saving workflows, and practical criteria for choosing a research summarizer that preserves fidelity and supports deep thinking. We also map how multimodal inputs (text, audio, images, video) fit into literature review and drafting workflows, and we highlight emerging trends and ethical considerations shaping AI use in research as of mid-2024. Finally, the piece shows how knowledge-mapping tools and AI thinking partners integrate into researcher workflows, with vignettes illustrating where time is saved per task and guidance for validating outputs.

What Is AI Summarization and How Does It Work for Research Papers?

AI summarization is the automated process of transforming dense scholarly text into concise, structured summaries that preserve the core claims, methods, and results. It works by preprocessing documents, building semantic representations of sentences and sections, and then applying extractive or abstractive techniques to produce outputs tuned for fidelity or synthesis. The mechanism relies on NLP pipelines (tokenization, embeddings, attention) and LLM reasoning to map relationships between hypotheses, methods, and findings. These summaries reduce cognitive load and speed up triage, so researchers can prioritize what to read in full and which experiments to follow up. Understanding these components clarifies the trade-offs and helps researchers choose the right summarization approach for their workflow.

How Do AI Tools Use NLP and Large Language Models to Summarize Documents?

AI tools begin by parsing and preprocessing scholarly text: tokenization, section detection (abstract, methods, results), and entity recognition to identify authors, datasets, and metrics. Embedding models then transform sentences into semantic vectors, enabling similarity comparisons and clustering across multiple documents to detect themes. LLMs use attention mechanisms over their context window to synthesize evidence across documents, paraphrase for clarity, and generate narrative summaries or bullet points as required. This pipeline supports retrieval-augmented generation (RAG), in which relevant source passages are retrieved and supplied to the model alongside the request, so that summaries are grounded in, and can cite, specific passages. Together, these steps let tools produce summaries that map claims to evidence, which speeds validation and downstream writing.
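
As a rough illustration of the section-detection step, the sketch below splits plain text into sections by matching common heading names. It assumes the PDF has already been converted to plain text and that headings sit on their own lines, optionally numbered; the heading list `SECTION_NAMES` is a hypothetical starting point, not a standard, and real papers vary far more than this handles.

```python
import re

# Hypothetical list of common section headings in empirical papers.
SECTION_NAMES = ["abstract", "introduction", "methods", "results", "discussion", "conclusion"]

# A heading line: optional numbering such as "2", "2." or "2.1", then a known name, nothing else.
HEADING_RE = re.compile(
    r"^\s*(?:\d+(?:\.\d+)*\.?\s+)?(" + "|".join(SECTION_NAMES) + r")\s*$",
    re.IGNORECASE,
)

def split_sections(text: str) -> dict[str, str]:
    """Group lines of plain text under the most recent recognized heading."""
    sections: dict[str, list[str]] = {}
    current = "preamble"  # text before the first recognized heading (title, authors)
    for line in text.splitlines():
        match = HEADING_RE.match(line)
        if match:
            current = match.group(1).lower()
            sections.setdefault(current, [])
        else:
            sections.setdefault(current, []).append(line)
    # Join each section's lines and trim surrounding whitespace.
    return {name: "\n".join(lines).strip() for name, lines in sections.items()}
```

Downstream steps can then target one field, for example summarizing only `split_sections(text)["methods"]`, which is how section-aware tools keep methods and results from being blurred together.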

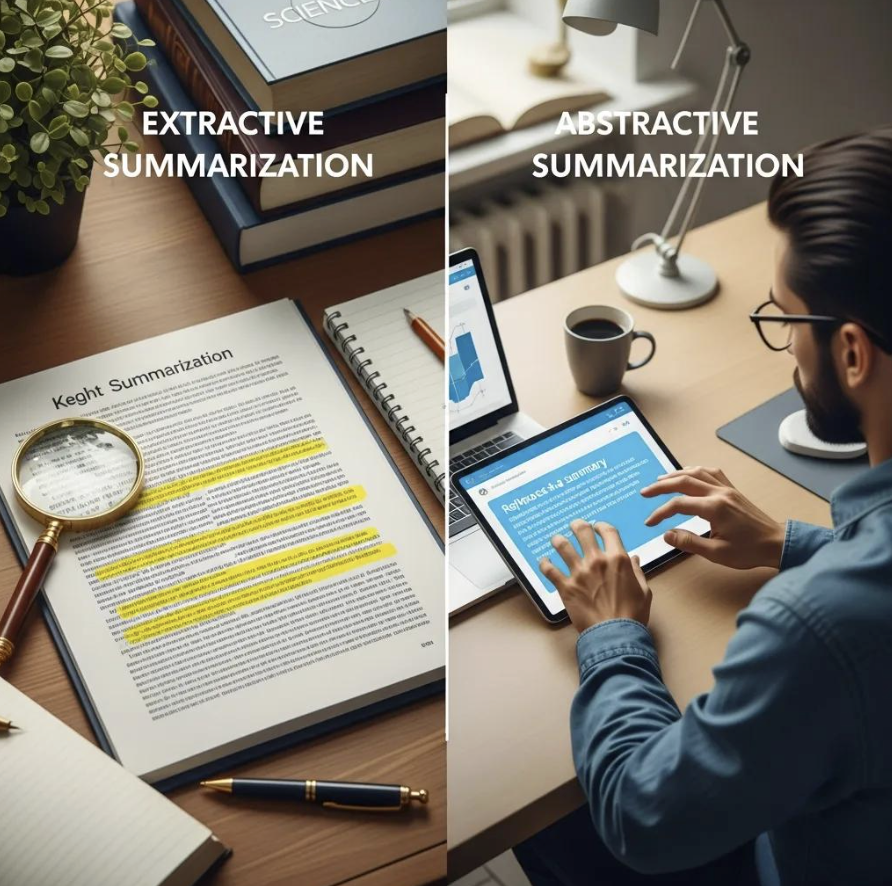

What Are Extractive vs. Abstractive Summarization Methods in AI Research Tools?

Extractive summarization selects verbatim sentences or phrases from the source text that best capture the document's salient points, preserving exact wording and high fidelity to the original claims. Abstractive summarization rewrites content into coherent narratives that integrate findings across sections or papers, offering streamlined interpretation at some risk of paraphrase-induced error. Extractive outputs are valuable when precise quotations and source fidelity matter, while abstractive outputs help when narrative synthesis and hypothesis generation are the priority. Balancing the two, often through hybrid pipelines, gives researchers both accurate evidence and higher-level insight without spending hours re-reading full texts.
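
To make the extractive side concrete, here is a minimal frequency-based extractive summarizer in the spirit of classic word-frequency scoring. It is a teaching sketch, not how any particular tool works: it scores each sentence by the average document frequency of its non-stopword terms and returns the top sentences verbatim, in their original order. The stopword list and the naive sentence splitter are assumptions.

```python
import re
from collections import Counter

# Small illustrative stopword list; real systems use much larger ones.
STOPWORDS = {"the", "a", "an", "of", "and", "or", "in", "on", "to", "for", "we", "is",
             "are", "was", "were", "with", "that", "this", "by", "as", "at", "be"}

def tokenize(text: str) -> list[str]:
    return [w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS]

def extractive_summary(text: str, k: int = 2) -> list[str]:
    """Return the k highest-scoring sentences, verbatim and in source order."""
    # Naive split: a sentence ends at ., ! or ? followed by whitespace.
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    freq = Counter(tokenize(text))

    def score(sentence: str) -> float:
        tokens = tokenize(sentence)
        return sum(freq[t] for t in tokens) / len(tokens) if tokens else 0.0

    # Rank sentence indices by score, keep the top k, then restore source order.
    top = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)[:k]
    return [sentences[i] for i in sorted(top)]
```

Because every returned string is copied from the source, each one can be located and checked directly, which is the fidelity advantage described above; an abstractive model would instead generate new wording from these or other inputs.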

How Does AI Summarization Save Time for Researchers and Knowledge Workers?

AI summarization saves researchers time by automating routine triage, extracting methods and metrics, and mapping cross-study findings so that key decisions happen faster and with less manual synthesis. In practice, summarizers convert long PDFs and supplementary materials into digestible summaries, highlight experimental parameters, and produce structured outputs that feed into literature reviews and lab notes. These capabilities reduce the total reading load while increasing the speed of insight generation, freeing researchers to focus on interpretation and design rather than mechanical extraction. Practical workflows show how summarizers can shift time from scanning to synthesis, enabling teams to reallocate hours toward experiments and writing.

What Are the Main Time-Saving Benefits of AI Research Summarizers?

AI summarizers accelerate screening, condense methodological details, and surface outcome metrics that researchers would otherwise extract manually, which can deliver substantial time savings across common tasks. For example, batch-screening a set of 50 abstracts for relevance can move from hours of manual review to minutes of AI-assisted triage plus human spot checks, and automatic extraction of methods and key results speeds inclusion in a literature matrix. Summaries also make it quicker to identify replicable parameters and to extract citations for writing. Together, these features reduce repetitive work and let teams allocate more time to hypothesis testing and critical interpretation.
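
For illustration, the screening step can be approximated by ranking abstracts against a set of inclusion terms. The sketch below is a deliberately simple keyword-overlap ranker that assumes you supply your own inclusion terms and `min_hits` threshold (both hypothetical parameters); real summarizers typically rely on embeddings or an LLM, and automated triage should still be followed by human spot checks of both included and excluded items.

```python
import re

def triage(abstracts: dict[str, str], inclusion_terms: set[str],
           min_hits: int = 2) -> list[tuple[str, int]]:
    """Return (paper_id, hits) for abstracts containing at least min_hits distinct terms, best first."""
    terms = {t.lower() for t in inclusion_terms}
    ranked = []
    for paper_id, abstract in abstracts.items():
        words = set(re.findall(r"[a-z0-9-]+", abstract.lower()))
        hits = len(terms & words)  # number of distinct inclusion terms present
        if hits >= min_hits:
            ranked.append((paper_id, hits))
    # Highest overlap first; ties broken alphabetically by id so reruns are reproducible.
    return sorted(ranked, key=lambda pair: (-pair[1], pair[0]))
```

Lowering `min_hits` trades precision for recall; for a systematic review, erring toward recall and letting a human exclude the extras is usually the safer setting.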

AI summarizers speed initial screening of literature to minutes rather than hours.

They extract experimental parameters and results into structured fields for faster comparison.

Summaries enable rapid synthesis across dozens of papers for literature mapping.

This combination of automated triage and structured extraction reallocates researcher time from data hunting to analysis and idea generation, increasing overall productivity without sacrificing thoroughness.
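
Structured extraction of parameters can be pictured with a few regular expressions. This sketch rests on assumptions: the field names and patterns (sample size written as `n = 120`, p-values as `p < 0.05`, percentages with `%`) are hypothetical conventions, and LLM-based tools handle far more phrasing variety. The point is the output shape: one structured record per paper that can be dropped into a comparison table.

```python
import re

# Hypothetical patterns for a few commonly reported values.
PATTERNS = {
    "sample_size": re.compile(r"\bn\s*=\s*(\d+)", re.IGNORECASE),
    "p_value": re.compile(r"\bp\s*([<>=])\s*(0?\.\d+)", re.IGNORECASE),
    "percent": re.compile(r"(\d+(?:\.\d+)?)\s*%"),
}

def extract_parameters(text: str) -> dict[str, list]:
    """Return every match per field; an empty list means 'not found by these patterns'."""
    return {
        "sample_size": [int(m) for m in PATTERNS["sample_size"].findall(text)],
        # Two capture groups, so findall yields (operator, value) tuples.
        "p_value": [f"{op}{val}" for op, val in PATTERNS["p_value"].findall(text)],
        "percent": [float(m) for m in PATTERNS["percent"].findall(text)],
    }
```

An empty field should be read as "the pattern did not match", not as "the study did not report it", which is one more reason to keep extracted values linked to the source sentence they came from.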

The table below compares common summarization outputs and their practical trade-offs to help researchers choose an approach that balances speed and fidelity.

| Summary Type | Characteristic | Best Use Case |
|---|---|---|
| Extractive Summary | High fidelity to source wording and citations | When exact quotes and provenance are required |
| Abstractive Narrative | Synthesized, paraphrased findings | When creating cohesive literature overviews |
| Hybrid Summary | Mix of extracts and synthesized commentary | Balanced needs: speed and interpretive context |

What Unique Features Does Ponder AI Offer for Deep Thinking and Research Summarization?

Ponder positions itself as an all-in-one knowledge workspace that supports deep thinking by combining a flexible canvas for organizing ideas with AI assistance that surfaces connections across documents. Its offering emphasizes an AI thinking partnership: an agent that helps users identify blind spots, propose hypotheses, and structure insights on a shared workspace. The platform accepts diverse file formats and processes them through third-party enterprise models, with a stated policy that user data is not used for model training, which aligns with researcher concerns about confidentiality. These capabilities illustrate how a knowledge-mapping environment can complement summarization by turning extracted facts into traceable insight and follow-up tasks.

How Does the Ponder Agent Assist with Knowledge Mapping and Insight Generation?

The Ponder Agent operates as an AI thinking partner that synthesizes uploaded materials into thematic maps and suggested next steps, helping researchers move from scattered notes to organized insight. In a typical vignette, a user imports multiple PDFs and datasets; the agent highlights cross-document patterns, proposes research questions, and recommends underexplored citations to follow up. It can export structured insight lists and knowledge maps that teams use for planning experiments or drafting literature sections. This workflow shortens the time from discovery to action by turning summarized evidence into prioritized research tasks and exportable artifacts.

The following table outlines Ponder-specific capabilities and how they map to researcher needs.

| Capability | Attribute | Value |
|---|---|---|
| Ponder Agent | Function | Knowledge mapping & insight suggestions |
| Infinite Canvas | Use | Flexible organization of ideas and connections |
| Multimodal Import | Supported formats | Documents, PDFs, audio, video, images, web pages |
| Security Model | Policy | Uses third-party enterprise models; user data not used for model training |

How Does Ponder AI Support Multimodal Input and Secure AI Processing?

Ponder accepts multimodal inputs, enabling researchers to synthesize evidence that appears outside traditional text, such as recorded interviews, conference videos, and annotated images, so that non-textual findings become part of a unified knowledge map. Multimodal summarization matters because many research projects rely on diverse evidence types that must be integrated into the literature narrative and methods synthesis. Ponder’s approach uses secure integrations with third-party providers of modern LLMs, and the company states that user files are not used for training and that employee access to files is restricted. These assurances address confidentiality needs while enabling advanced model capabilities for complex, multimodal summarization.

This section shows how multimodal processing and security assurances together let researchers decide when to use third-party AI for richer synthesis while keeping sensitive material controlled.

Teams evaluating Ponder can compare its subscription plans to see how each tier's capabilities align with their specific research requirements.

How Can AI Tools Streamline Literature Reviews and Academic Writing?

AI tools streamline literature reviews by clustering themes, extracting citations and claims, and generating structured synthesis drafts that researchers can refine and validate. These tools can auto-cluster a corpus to reveal under-researched areas, build evidence matrices linking claims to sources, and produce first-draft narratives that incorporate extracted methods and results. In writing workflows, summarizers speed outline creation, suggest paragraph-level syntheses, and help format citations for quick insertion. When used responsibly, AI accelerates the cyclical work of review, synthesis, and drafting, converting previously manual steps into guided, verifiable processes.
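
An evidence matrix is, at its simplest, a table with one row per claim and one column per source. The sketch below builds one from hypothetical claim keyword sets: a cell is marked when a source's text contains every keyword for that claim. Substring keyword matching is a crude stand-in for the semantic matching real tools perform; the useful part is the structure, where an empty row points to a claim with no supporting source yet.

```python
def evidence_matrix(claims: dict[str, set[str]],
                    sources: dict[str, str]) -> dict[str, dict[str, bool]]:
    """Map each claim to {source_id: True if the source contains every claim keyword}."""
    # Case-insensitive substring match, so "depress" would also match "depression".
    return {
        claim: {sid: all(k.lower() in text.lower() for k in keywords)
                for sid, text in sources.items()}
        for claim, keywords in claims.items()
    }

def unsupported_claims(matrix: dict[str, dict[str, bool]]) -> list[str]:
    """Claims with no supporting source: candidates for more searching, or for removal."""
    return [claim for claim, row in matrix.items() if not any(row.values())]

def to_markdown(matrix: dict[str, dict[str, bool]]) -> str:
    """Render the matrix as a pipe table with an 'x' where a source supports a claim."""
    source_ids = sorted({s for row in matrix.values() for s in row})
    lines = ["| Claim | " + " | ".join(source_ids) + " |",
             "|---|" + "---|" * len(source_ids)]
    for claim, row in matrix.items():
        cells = ["x" if row.get(s) else "" for s in source_ids]
        lines.append(f"| {claim} | " + " | ".join(cells) + " |")
    return "\n".join(lines)
```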

What AI Features Help Identify Research Gaps and Synthesize Sources?

Key AI features that reveal gaps and synthesize evidence include topic modeling for thematic distributions, citation extraction for mapping influence chains, and entity recognition for identifying repeated measures or experimental conditions. A practical sequence is to ingest a corpus, run auto-clustering to surface dominant themes, then request targeted summaries for underrepresented themes to validate potential gaps. These features produce structured outputs—tables, evidence lists, and narrative paragraphs—that make gap identification transparent and reproducible. By automating theme detection and evidence linking, AI reduces the time from broad survey to targeted research question formulation.
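
The ingest, cluster, then probe-the-smallest-themes sequence can be sketched without a topic model by assigning documents to hand-written theme keyword lists and flagging themes whose share of the corpus falls below a threshold. Topic models such as LDA, or clustering over embeddings, discover themes automatically; the keyword dictionary and `max_share` threshold here are assumptions that keep the example self-contained. A low count is only a candidate gap: it may equally mean the search terms missed relevant work.

```python
from collections import Counter

def theme_distribution(docs: list[str], themes: dict[str, list[str]]) -> Counter:
    """Count documents mentioning at least one keyword per theme.

    Keywords are expected in lowercase and matched as substrings of the lowercased text.
    """
    counts = Counter({theme: 0 for theme in themes})  # keep zero-count themes visible
    for doc in docs:
        text = doc.lower()
        for theme, keywords in themes.items():
            if any(k in text for k in keywords):
                counts[theme] += 1
    return counts

def candidate_gaps(counts: Counter, n_docs: int, max_share: float = 0.1) -> list[str]:
    """Themes covered by at most max_share of the corpus, least covered first."""
    return sorted((t for t, c in counts.items() if c / n_docs <= max_share),
                  key=lambda t: (counts[t], t))
```

The flagged themes are what the paragraph above suggests requesting targeted summaries for, to check whether the gap is real.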

Topic modeling surfaces the distribution of themes across a literature corpus.

Citation extraction builds maps of influential works and methodological lineages.

Entity recognition identifies recurring measures and experimental parameters.

These automated steps let researchers focus their attention on interpretation and hypothesis development rather than exhaustive manual mapping.
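
Citation extraction for author-year styles can be approximated with regular expressions. The patterns below are an assumption that covers forms like `(Smith, 2020)`, `(Smith et al., 2020)`, and `(Lee & Park, 2019)`, including several citations separated by semicolons inside one pair of parentheses; numbered styles such as `[12]` would need a separate pass against the reference list. Counting citations across a corpus gives a first, rough view of influential works.

```python
import re
from collections import Counter

# One author-year citation: surname, optional "et al." or "& Surname", optional comma, year.
CITE_RE = re.compile(r"([A-Z][A-Za-z'-]+(?: et al\.| (?:&|and) [A-Z][A-Za-z'-]+)?),? (\d{4}[a-z]?)")
# Parenthetical groups that contain a four-digit year.
PAREN_RE = re.compile(r"\(([^()]*\d{4}[^()]*)\)")

def extract_citations(text: str) -> list[str]:
    """Return normalized 'Author, Year' strings for each author-year citation found."""
    cites = []
    for group in PAREN_RE.findall(text):
        for part in group.split(";"):
            m = CITE_RE.search(part)
            if m:
                cites.append(f"{m.group(1)}, {m.group(2)}")
    return cites

def most_cited(docs: list[str], top: int = 3) -> list[tuple[str, int]]:
    """Most frequent citations across a corpus of documents."""
    return Counter(c for doc in docs for c in extract_citations(doc)).most_common(top)
```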

Research in specialized fields, such as clinical studies, highlights the importance of factual consistency in AI-generated summaries.

AI assistants facilitate drafting by generating outlines and first-pass text that researchers can edit for voice and rigor, and they support editing with clarity, concision, and format suggestions tuned to academic norms. Many systems extract citations into structured bibliographic entries and can suggest in-text citation placements tied to summarized evidence, easing tedious formatting tasks. A recommended workflow is outline generation, AI-assisted draft creation, manual verification against source passages, and final editing for tone and accuracy. Researchers must validate AI outputs against originals to prevent attribution errors, but when combined with careful review, these assistants compress multiple writing stages into fewer iterative passes.
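
The manual-verification step can be supported by an automated pre-check that flags draft sentences with weak lexical support in the source passages. The sketch below is an assumption, not a description of any particular tool: for each sentence it computes the largest fraction of its content words found in any single passage and flags sentences under a hypothetical `threshold`. Lexical overlap misses legitimate paraphrase and can pass a sentence that reuses source words to say something different, so it narrows where a human looks rather than replacing the check.

```python
import re

STOP = {"the", "a", "an", "of", "and", "in", "to", "for", "is", "are",
        "was", "were", "with", "on", "that", "this"}

def content_words(text: str) -> set[str]:
    # Words, plus numbers with optional decimals (e.g. "30", "0.01").
    return {w for w in re.findall(r"[a-z]+|\d+(?:\.\d+)?", text.lower()) if w not in STOP}

def support_score(sentence: str, passages: list[str]) -> float:
    """Largest fraction of the sentence's content words found in any one passage."""
    words = content_words(sentence)
    if not words:
        return 1.0  # nothing checkable in the sentence
    return max((len(words & content_words(p)) / len(words) for p in passages), default=0.0)

def flag_weak_sentences(draft: str, passages: list[str], threshold: float = 0.6) -> list[str]:
    """Sentences of the draft whose best support score falls below the threshold."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", draft) if s.strip()]
    return [s for s in sentences if support_score(s, passages) < threshold]
```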

This approach reduces repetitive drafting time and accelerates the transition from notes to polished manuscript-ready drafts while preserving scholarly standards.

What Should You Look for When Choosing an AI Research Summarizer?

Choosing an AI research summarizer requires evaluating accuracy, customization, integration with your workflow, multimodal support, and data security to ensure the tool both saves time and preserves research integrity. Accuracy matters most—look for systems that link summaries back to source passages or provide extractive evidence to enable quick verification. Customization options let you tune summary length, style, and domain-specific vocabulary, while integrations (export formats, citation managers, APIs) determine how smoothly outputs fit into existing processes. Security and privacy safeguards, such as encryption, access controls, and assurances about model training, are essential when handling unpublished or sensitive research materials.

The table below compares key selection criteria and why they matter, helping teams make informed decisions when evaluating tools.

| Criterion | What to Check | Why It Matters |
|---|---|---|
| Accuracy | Source linking & extractive evidence | Ensures summaries can be validated against original text |
| Customization | Summary length/style options | Aligns outputs with discipline conventions and reviewer expectations |
| Integration | Export formats & APIs | Preserves workflow continuity and reduces manual transfer costs |
| Security | Data handling & model policies | Protects unpublished research and complies with institutional rules |
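
One way to make the comparison in the table systematic is a weighted scoring matrix over its four criteria. The weights, the 1-to-5 ratings, and the `min_security` floor below are placeholders a team would set for itself; they are not measurements of any real product. The floor encodes the idea that a tool failing a hard requirement, such as an institutional security rule, should be excluded regardless of its total.

```python
# Placeholder weights over the four criteria in the table; they sum to 1.
WEIGHTS = {"accuracy": 0.4, "customization": 0.15, "integration": 0.2, "security": 0.25}

def weighted_score(ratings: dict[str, int], weights: dict[str, float] = WEIGHTS) -> float:
    """Combine 1-5 ratings per criterion into one weighted score."""
    missing = set(weights) - set(ratings)
    if missing:
        raise ValueError(f"missing ratings for: {sorted(missing)}")
    return round(sum(weights[c] * ratings[c] for c in weights), 2)

def rank_tools(candidates: dict[str, dict[str, int]],
               min_security: int = 3) -> list[tuple[str, float]]:
    """Drop tools below the security floor, then rank the rest by weighted score."""
    eligible = {name: r for name, r in candidates.items() if r["security"] >= min_security}
    return sorted(((name, weighted_score(r)) for name, r in eligible.items()),
                  key=lambda pair: pair[1], reverse=True)
```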

How Important Are Accuracy, Customization, and Integration in AI Summarization Tools?

Accuracy is critical because research decisions depend on correct interpretation of methods and results; test tools by comparing AI summaries against abstracts and conclusions to measure fidelity. Customization enables discipline-specific phrasing and length control, which is important when moving from broad summaries to journal-ready prose or grant narratives. Integration matters because exportable formats, citation compatibility, and API access reduce friction when transferring AI outputs into bibliographic managers, lab notebooks, or collaboration platforms. A sensible validation protocol includes spot-checking summaries, using extractive traces for verification, and ensuring exports maintain provenance metadata.

Test accuracy by comparing AI outputs to source abstracts and key passages.

Evaluate customization through adjustable length and style presets.

Check integration by exporting sample summaries into your citation manager or knowledge base.

These checks make tool selection systematic and reduce risk when adopting summarization into core research processes.
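
For a quick, repeatable version of the accuracy check, one can compute unigram overlap between an AI summary and the source abstract, which is the idea behind the ROUGE-1 metric. This is a simplified reimplementation for illustration (lowercasing, no stemming), not the official scorer; a high score means shared vocabulary, not correct meaning, so it complements rather than replaces reading the source.

```python
import re
from collections import Counter

def rouge1(summary: str, reference: str) -> dict[str, float]:
    """Unigram precision, recall, and F1 with clipped counts (simplified ROUGE-1)."""
    sum_counts = Counter(re.findall(r"[a-z0-9]+", summary.lower()))
    ref_counts = Counter(re.findall(r"[a-z0-9]+", reference.lower()))
    overlap = sum((sum_counts & ref_counts).values())  # & keeps the minimum count per word
    precision = overlap / sum(sum_counts.values()) if sum_counts else 0.0
    recall = overlap / sum(ref_counts.values()) if ref_counts else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}
```

Running this over a small sample of summaries from each candidate tool, against the same abstracts, gives a like-for-like number to set beside the manual spot checks.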

Why Is Data Security and Privacy Critical in AI Research Assistants?

Research data often includes unpublished findings, sensitive human-subject data, or proprietary methods, making data security central when using third-party summarization services. Essential security features to request include encryption in transit and at rest, granular access controls, contractual assurances that data will not be used to train public models, and independent compliance documentation such as SOC 2 reports, alongside the vendor's published data-handling policies. Validating vendor claims involves reviewing this documentation, asking how models are hosted (enterprise third-party deployments vs. public endpoints), and confirming role-based access to stored files. These measures protect intellectual property and uphold institutional and ethical obligations for data stewardship.

This security focus ensures that time-saving AI tools do not introduce unacceptable disclosure risks into sensitive research workflows.

What Are the Future Trends and Opportunities for AI in Research and Knowledge Management?

Future trends point to tighter integration between generative AI, continuous learning pipelines, and multimodal reasoning, enabling richer synthesis across diverse evidence types and summarization personalized to individual research agendas. Retrieval-augmented generation (RAG) and improved grounding techniques should reduce hallucinations by tying model outputs to explicit source passages, while adaptive fine-tuning and user-specific memory may let summarizers learn domain preferences, provided privacy safeguards keep that data under the user's control. As tools evolve, knowledge graphs and canvas-style workspaces will make long-term insight accumulation and hypothesis tracking more systematic for research teams. These trends promise enhanced productivity but also require governance to maintain reproducibility and attribution.

How Will Generative AI and Continuous Learning Impact Research Summarization?

Generative AI combined with RAG workflows will make summaries more grounded and context-aware by retrieving exact evidence snippets before generating narrative syntheses, improving factual fidelity and traceability. Continuous learning—where models adapt to a user’s domain vocabulary and preferred structures—will deliver more relevant and time-saving outputs that align with lab conventions and disciplinary norms. Multimodal LLMs capable of reasoning across text, tables, images, and audio will enable integrated literature reviews that incorporate conference talks or lab recordings into synthesized insight. These developments will reduce manual alignment work and let researchers iterate faster on designs and manuscripts.
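
The retrieve-then-generate pattern can be sketched in a few lines. Assumptions: passages are already chunked and labeled with ids, bag-of-words cosine similarity stands in for the dense embeddings real systems use, and the final call to a language model is omitted because it depends on the provider. What the sketch does show is the grounding step: the top-k passages are placed into the prompt with identifiers so the generated summary can cite them.

```python
import math
import re
from collections import Counter

def bow(text: str) -> Counter:
    """Bag-of-words term counts (a stand-in for an embedding vector)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query: str, passages: dict[str, str], k: int = 2) -> list[str]:
    """Return the ids of the k passages most similar to the query."""
    q = bow(query)
    ranked = sorted(passages, key=lambda pid: cosine(q, bow(passages[pid])), reverse=True)
    return ranked[:k]

def build_grounded_prompt(question: str, passages: dict[str, str], k: int = 2) -> str:
    """Assemble a prompt that restricts the model to retrieved, labeled evidence."""
    ids = retrieve(question, passages, k)
    evidence = "\n".join(f"[{pid}] {passages[pid]}" for pid in ids)
    return ("Answer using only the evidence below and cite passage ids in brackets.\n"
            f"Evidence:\n{evidence}\n\nQuestion: {question}")
```

Because each passage keeps its id in the prompt, every bracketed citation in the model's answer can be traced back to an exact snippet, which is the traceability benefit described above.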

This technical evolution points to faster, more trustworthy summaries that fit tighter into researcher workflows and support long-term knowledge accumulation.

What Ethical Considerations Are Growing in AI-Assisted Academic Research?

Ethical considerations include attribution of AI-generated content, the risk of hallucinated claims, reproducibility of AI-assisted syntheses, and compliance with publication norms that increasingly require transparency about AI use. Best practices advise always linking summaries to source passages, documenting AI prompts and verification steps, and acknowledging AI assistance consistent with journal policies. Researchers should adopt verification workflows that combine automated extractive traces with human review and maintain auditable provenance metadata for claims derived from AI outputs. Institutions will likely formalize policies around permissible AI use in manuscripts and peer review, making transparent practices an essential part of responsible adoption.
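
Auditable provenance can be as simple as an append-only log with one record per AI-assisted output. The record layout below is a hypothetical schema, not a journal or institutional standard: it stores the prompt, the model name as reported by the tool, SHA-256 hashes of the exact source files so later readers can confirm which versions were summarized, the output, and who verified it.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Optional

@dataclass
class ProvenanceRecord:
    prompt: str
    model: str                       # as reported by the tool; free text here
    source_hashes: dict[str, str]    # filename -> SHA-256 of the exact bytes summarized
    output: str
    verified_by: Optional[str] = None  # filled in after human review
    timestamp: str = field(default_factory=lambda: datetime.now(timezone.utc).isoformat())

def make_record(prompt: str, model: str, sources: dict[str, bytes], output: str) -> ProvenanceRecord:
    hashes = {name: hashlib.sha256(data).hexdigest() for name, data in sources.items()}
    return ProvenanceRecord(prompt=prompt, model=model, source_hashes=hashes, output=output)

def to_jsonl(record: ProvenanceRecord) -> str:
    """One JSON object per line, suitable for appending to an audit log file."""
    return json.dumps(asdict(record), sort_keys=True)
```

Hashing the bytes rather than storing file paths alone means a record still identifies the right version if a PDF is later replaced by a revised preprint.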

For readers interested in tools that combine knowledge mapping with secure AI integration and an emphasis on deep thinking, Ponder offers an all-in-one knowledge workspace and AI thinking partnership designed to help researchers synthesize multimodal inputs while maintaining data control and provenance. Exploring vendor materials and demos can help teams evaluate how these capabilities align with institutional security needs and research workflows.